Hey there, tech enthusiasts! If you’ve been diving into the world of cloud computing and IoT, you’re probably wondering how to use AWS for batch processing in a RemoteIoT setup. Today, we’re going to break it down step by step. Running RemoteIoT batch jobs on AWS is more than just a concept; it can make a real difference for businesses looking to optimize their IoT workflows. Whether you’re a seasoned developer or just starting out, this guide walks through the architecture, the setup, and a worked example to help you get started.

Picture this: you’ve got thousands of IoT devices generating data every second. How do you process all that information efficiently without breaking the bank? Enter AWS Batch—a powerful service designed to handle compute-intensive tasks with ease. In this article, we’ll walk you through everything you need to know about setting up and running a RemoteIoT batch job in AWS, complete with tips, tricks, and best practices.

So, grab your favorite beverage, sit back, and let’s dive into the nitty-gritty of RemoteIoT batch job examples in AWS. By the end of this article, you’ll have all the tools you need to take your IoT projects to the next level. Let’s get started, shall we?


Table of Contents

- Introduction to RemoteIoT Batch Job in AWS

- What is AWS Batch and Why Use It?

- Understanding RemoteIoT Batch Architecture

- Setting Up Your AWS Environment

- Step-by-Step RemoteIoT Batch Job Example

- Best Practices for AWS Batch Jobs

- Managing Costs in AWS Batch

- Scaling Your RemoteIoT Batch Jobs

- Troubleshooting Common Issues

- Wrapping Up

Introduction to RemoteIoT Batch Job in AWS

Alright, let’s kick things off with the basics. A RemoteIoT batch job in AWS is essentially about automating large-scale data processing tasks using cloud infrastructure. AWS Batch simplifies the process by dynamically scaling compute resources based on the volume of jobs in your queue, within the limits you configure. This means you spend far less time worrying about over-provisioning or under-provisioning resources, because AWS handles most of that for you.

But why should you care? Well, if you’re dealing with massive amounts of IoT data, traditional methods of processing can quickly become inefficient and costly. AWS Batch offers a scalable, cost-effective solution that integrates seamlessly with other AWS services like S3, Lambda, and IoT Core. Whether you’re processing sensor data, analyzing logs, or running machine learning models, AWS Batch has got your back.

Let’s put a number on it. One figure attributed to a Gartner report puts the average reduction in operational costs at 30% for enterprises that adopt cloud-based batch processing solutions. Treat that as a rough indication rather than a guarantee, since the actual savings depend on your workload and on how carefully you size and schedule your jobs.

What is AWS Batch and Why Use It?

So, what exactly is AWS Batch? Simply put, it’s a managed service that helps you run batch computing workloads on AWS without worrying about the underlying infrastructure. It’s like having a personal assistant for your compute-heavy tasks, but way more efficient.

Key Features of AWS Batch

- Dynamic Scaling: Automatically adjusts compute resources based on job demand.

- Job Queues: Organizes and prioritizes jobs for efficient processing.

- Integration: Works seamlessly with other AWS services like EC2, ECS, and Fargate.

- Cost Optimization: Can run on Spot Instances to reduce costs, provided your jobs tolerate interruptions.

Why choose AWS Batch over other solutions? First off, it’s fully managed, which means less time spent on maintenance and more time focused on innovation. Plus, its tight integration with the AWS ecosystem makes it a natural fit for IoT workloads. Need to process data from thousands of sensors? AWS Batch can handle it with ease.

Understanding RemoteIoT Batch Architecture

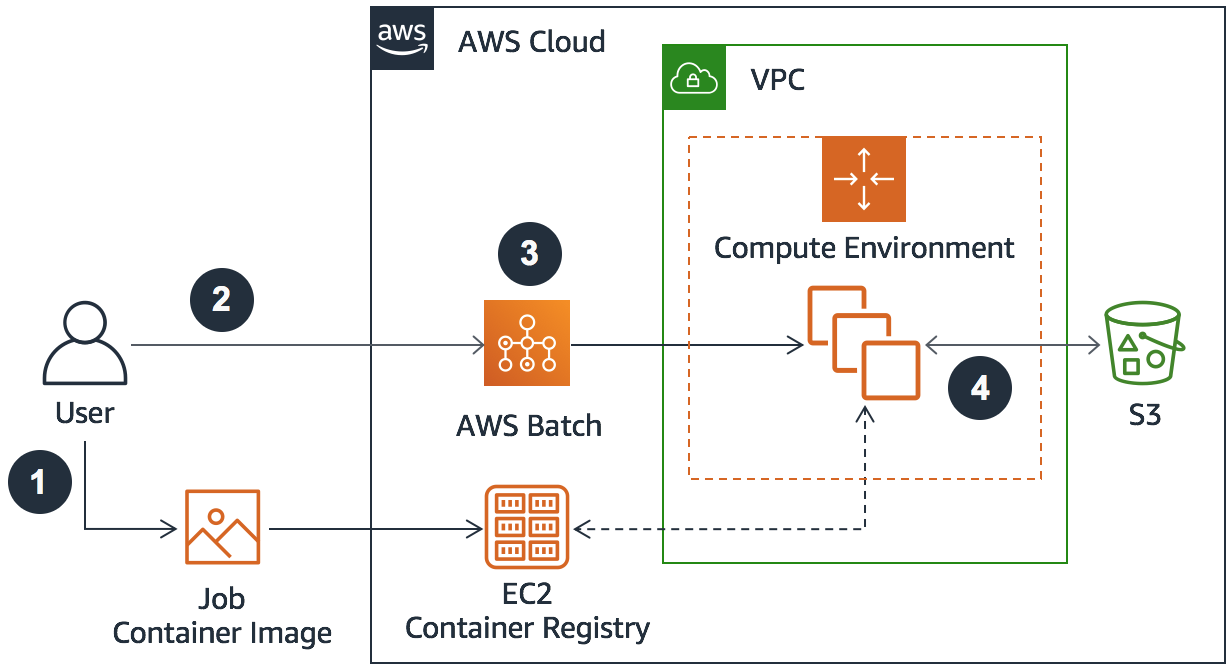

Now that we’ve covered the basics, let’s dive into the architecture of a RemoteIoT batch job in AWS. At its core, the architecture consists of three main components:


1. Data Collection

This is where your IoT devices come into play. Devices like sensors, cameras, and smart meters send data to AWS IoT Core, which acts as the central hub for all your IoT communications. From there, IoT rules route the data to S3 buckets or Kinesis Data Streams for further processing.
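To make the routing step concrete, here’s a minimal sketch of an IoT Core topic rule that writes every matching message to S3. The topic filter `farm/+/soil-moisture`, the `raw/` key prefix, and the rule name in the usage note are assumptions made up for illustration; the payload shape follows the `create_topic_rule` call in boto3.

```python
def build_topic_rule_payload(bucket_name, role_arn, topic_filter="farm/+/soil-moisture"):
    """Build the topicRulePayload for IoT Core's CreateTopicRule API.

    The topic filter and key layout are hypothetical; adapt them to your devices.
    """
    return {
        # IoT SQL: forward every field of messages that match the topic filter.
        "sql": f"SELECT * FROM '{topic_filter}'",
        "awsIotSqlVersion": "2016-03-23",
        "ruleDisabled": False,
        "actions": [
            {
                "s3": {
                    # Role that IoT Core assumes to write into the bucket.
                    "roleArn": role_arn,
                    "bucketName": bucket_name,
                    # Substitution templates are resolved by IoT Core per message.
                    "key": "raw/${topic()}/${timestamp()}.json",
                }
            }
        ],
    }


def create_rule(iot_client, rule_name, payload):
    """Create the rule; iot_client is expected to be boto3.client("iot")."""
    return iot_client.create_topic_rule(ruleName=rule_name, topicRulePayload=payload)
```

With boto3 installed and credentials configured, you would call something like `create_rule(boto3.client("iot"), "soil_moisture_to_s3", payload)`. Writing one object per message is simple, but for high-volume fleets a Kinesis-based path may be the better fit.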

2. Batch Processing

Once the data is collected, AWS Batch takes over. Jobs are submitted to a queue, where they’re prioritized and executed based on resource availability. You can define job definitions, specify compute environments, and even set up dependencies between jobs.
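Job dependencies are easier to picture with code. The sketch below chains jobs so that each one starts only after the previous one succeeds, using the `dependsOn` parameter of `submit_job`. The stage names and the idea of a linear pipeline are assumptions for illustration, not something the architecture requires.

```python
def submit_pipeline(batch_client, job_queue, stages):
    """Submit stages in order; each stage depends on the one before it.

    stages is a list of (job_name, job_definition) tuples.
    batch_client is expected to be boto3.client("batch").
    Returns the job IDs in submission order.
    """
    job_ids = []
    for job_name, job_definition in stages:
        kwargs = {
            "jobName": job_name,
            "jobQueue": job_queue,
            "jobDefinition": job_definition,
        }
        if job_ids:
            # Hold this job until the previous stage has succeeded.
            kwargs["dependsOn"] = [{"jobId": job_ids[-1]}]
        response = batch_client.submit_job(**kwargs)
        job_ids.append(response["jobId"])
    return job_ids
```

For example, `submit_pipeline(client, "remoteiot-queue", [("clean", "clean-def"), ("aggregate", "aggregate-def")])` would run the hypothetical `aggregate` job only after `clean` finishes successfully.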

3. Data Storage and Analysis

After processing, the results are stored in S3, where Athena can query them in place, or loaded into Redshift for deeper analysis. This step is crucial for deriving actionable intelligence from your IoT data.

Here’s a quick diagram to help visualize the flow:

Data Collection → Batch Processing → Data Storage & Analysis

Setting Up Your AWS Environment

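Ready to get hands-on? Let’s walk through the steps to set up your AWS environment for RemoteIoT batch jobs. First things first, you’ll need an AWS account. If you don’t have one yet, sign up for a free tier account to get started. Keep in mind that AWS Batch itself has no additional charge, but you do pay for the compute and storage resources your jobs use.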

Step 1: Create an IAM Role

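Security is key, so start by creating an IAM role with the permissions your batch jobs need to reach S3 and the other services they touch. Managed policies such as AmazonS3FullAccess and AWSBatchFullAccess are convenient while you experiment, but they grant far more than a batch job requires, so scope permissions down to specific buckets and actions before going to production.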
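As a tighter alternative to broad managed policies, here’s a sketch of a job role that can only read raw data and write results in a single bucket. The `raw/` and `results/` prefixes and the inline policy name are assumptions; the trust policy uses the `ecs-tasks.amazonaws.com` principal because Batch containers run as ECS tasks.

```python
import json


def ecs_task_trust_policy():
    """Trust policy that lets ECS tasks (which run Batch containers) assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "ecs-tasks.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def bucket_scoped_policy(bucket_name, input_prefix="raw/", output_prefix="results/"):
    """Read-only access to the input prefix, write-only access to the output prefix."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": ["s3:ListBucket"], "Resource": bucket_arn},
            {"Effect": "Allow", "Action": ["s3:GetObject"],
             "Resource": f"{bucket_arn}/{input_prefix}*"},
            {"Effect": "Allow", "Action": ["s3:PutObject"],
             "Resource": f"{bucket_arn}/{output_prefix}*"},
        ],
    }


def create_job_role(iam_client, role_name, bucket_name):
    """Create the role and attach the inline policy; iam_client is boto3.client("iam")."""
    role = iam_client.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(ecs_task_trust_policy()),
    )
    iam_client.put_role_policy(
        RoleName=role_name,
        PolicyName="remoteiot-s3-access",  # hypothetical policy name
        PolicyDocument=json.dumps(bucket_scoped_policy(bucket_name)),
    )
    return role["Role"]["Arn"]
```

The returned ARN is what you would later pass as the job role in a job definition.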

Step 2: Set Up an S3 Bucket

Your S3 bucket will serve as the storage location for your IoT data. Create a new bucket and configure it to enable versioning and server-side encryption for added security.
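Here’s roughly what that bucket setup looks like in code. This is a sketch that assumes SSE-S3 (`AES256`) is acceptable as the default encryption; swap in a KMS key if your security requirements call for one.

```python
def configure_bucket(s3_client, bucket_name, region):
    """Create a bucket, then enable versioning and default encryption.

    s3_client is expected to be boto3.client("s3", region_name=region).
    """
    if region == "us-east-1":
        # us-east-1 is the default location and rejects an explicit constraint.
        s3_client.create_bucket(Bucket=bucket_name)
    else:
        s3_client.create_bucket(
            Bucket=bucket_name,
            CreateBucketConfiguration={"LocationConstraint": region},
        )
    s3_client.put_bucket_versioning(
        Bucket=bucket_name,
        VersioningConfiguration={"Status": "Enabled"},
    )
    s3_client.put_bucket_encryption(
        Bucket=bucket_name,
        ServerSideEncryptionConfiguration={
            "Rules": [
                # Assumption: SSE-S3 is sufficient as the default.
                {"ApplyServerSideEncryptionByDefault": {"SSEAlgorithm": "AES256"}}
            ]
        },
    )
```

Bucket names are globally unique, so a name like `remoteiot-sensor-data` is only a placeholder.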

Step 3: Configure AWS Batch

Next, set up your compute environment and job queue in AWS Batch. Choose between EC2 or Fargate for your compute resources, depending on your workload requirements. Don’t forget to register your job definitions; dependencies between jobs are specified later, when you submit them.

Pro tip: Use Spot Instances to save on costs, as long as your jobs can be retried after an interruption.
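The compute environment and job queue boil down to two API requests. Here’s a sketch that builds both for a managed Fargate environment; the names, the 16 vCPU ceiling, and the choice of Fargate Spot are assumptions, and you would supply your own subnet and security group IDs.

```python
def fargate_compute_environment(name, subnets, security_group_ids,
                                max_vcpus=16, use_spot=True):
    """Request body for create_compute_environment (managed, Fargate-based)."""
    return {
        "computeEnvironmentName": name,
        "type": "MANAGED",
        "state": "ENABLED",
        "computeResources": {
            # FARGATE_SPOT is cheaper but tasks can be interrupted.
            "type": "FARGATE_SPOT" if use_spot else "FARGATE",
            "maxvCpus": max_vcpus,
            "subnets": list(subnets),
            "securityGroupIds": list(security_group_ids),
        },
    }


def job_queue(name, compute_environment, priority=1):
    """Request body for create_job_queue, backed by one compute environment."""
    return {
        "jobQueueName": name,
        "state": "ENABLED",
        "priority": priority,
        "computeEnvironmentOrder": [
            {"order": 1, "computeEnvironment": compute_environment}
        ],
    }
```

With a boto3 Batch client you would pass these as keyword arguments, for example `client.create_compute_environment(**fargate_compute_environment(...))` followed by `client.create_job_queue(**job_queue(...))`. The compute environment has to reach the VALID state before a queue can use it, so expect a short wait between the two calls.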

Step-by-Step RemoteIoT Batch Job Example

Let’s put theory into practice with a real-world example. Imagine you’re working for a smart agriculture company that uses IoT sensors to monitor soil moisture levels. Here’s how you can set up a RemoteIoT batch job in AWS to process this data:

Step 1: Define Your Job

Create a job definition that specifies the Docker image, vCPU and memory requirements, and any environment variables needed for your batch job. For instance, you might use a Python script to analyze the sensor data and generate insights.
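A job definition for the soil moisture scenario might look like the sketch below. Everything specific in it is an assumption: the definition name, the `analyze.py` entry point, the `DATA_BUCKET` variable, and the 1 vCPU / 2048 MiB sizing. It targets Fargate, which is why an execution role is included.

```python
def soil_moisture_job_definition(image, job_role_arn, execution_role_arn, bucket_name):
    """Request body for register_job_definition (container job on Fargate)."""
    return {
        "jobDefinitionName": "soil-moisture-analysis",  # hypothetical name
        "type": "container",
        "platformCapabilities": ["FARGATE"],
        "containerProperties": {
            "image": image,
            # Hypothetical entry point baked into the image.
            "command": ["python", "analyze.py"],
            # Role the script uses to read and write S3.
            "jobRoleArn": job_role_arn,
            # Role used to pull the image and ship logs; required on Fargate.
            "executionRoleArn": execution_role_arn,
            "resourceRequirements": [
                {"type": "VCPU", "value": "1"},
                {"type": "MEMORY", "value": "2048"},  # MiB
            ],
            "environment": [
                {"name": "DATA_BUCKET", "value": bucket_name},
            ],
        },
    }
```

You would register it with `client.register_job_definition(**soil_moisture_job_definition(...))`. Note that resource values are strings in this API, not numbers.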

Step 2: Submit the Job

Once your job definition is ready, submit it to the job queue. AWS Batch will automatically provision the necessary compute resources and execute the job based on your specifications.
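Submitting is a single call. In this sketch each run processes one day of readings, selected through a hypothetical `INPUT_PREFIX` environment variable passed as a container override; the `raw/<day>/` key layout is likewise an assumption.

```python
def submit_daily_job(batch_client, job_queue, job_definition, day):
    """Submit one job for the given day (for example "2024-06-01").

    batch_client is expected to be boto3.client("batch").
    Returns the job ID assigned by AWS Batch.
    """
    response = batch_client.submit_job(
        jobName=f"soil-moisture-{day}",
        jobQueue=job_queue,
        jobDefinition=job_definition,
        containerOverrides={
            # Overrides are merged with the environment in the job definition.
            "environment": [{"name": "INPUT_PREFIX", "value": f"raw/{day}/"}]
        },
    )
    return response["jobId"]
```

Keeping the day out of the job definition means one definition can serve every run.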

Step 3: Monitor Progress

Use the AWS Management Console or CLI to monitor the progress of your batch job. You can view logs, check resource utilization, and even troubleshoot issues if something goes wrong.
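If you’d rather watch a job from a script than from the console, a simple polling loop does the trick. This is a minimal sketch built on `describe_jobs`; the 30-second interval is an arbitrary choice, and the `sleep` parameter exists only so the loop can be exercised without waiting.

```python
import time

# A job ends in one of these two states; everything else is still in flight.
TERMINAL_STATES = {"SUCCEEDED", "FAILED"}


def wait_for_job(batch_client, job_id, poll_seconds=30, sleep=time.sleep):
    """Poll until the job reaches a terminal state.

    batch_client is expected to be boto3.client("batch").
    Returns (status, status_reason); the reason may be None.
    """
    while True:
        job = batch_client.describe_jobs(jobs=[job_id])["jobs"][0]
        status = job["status"]
        if status in TERMINAL_STATES:
            return status, job.get("statusReason")
        sleep(poll_seconds)
```

On the way to a terminal state a job moves through SUBMITTED, PENDING, RUNNABLE, STARTING, and RUNNING. A job that sits in RUNNABLE for a long time usually points at the compute environment rather than at your code.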

Best Practices for AWS Batch Jobs

Now that you’ve got the hang of setting up RemoteIoT batch jobs in AWS, here are some best practices to keep in mind:

- Optimize Resource Allocation: Use Spot Instances and Reserved Instances to reduce costs.

- Automate Everything: Leverage AWS Step Functions to automate workflows and reduce manual intervention.

- Monitor Performance: Use CloudWatch to track metrics and set up alerts for potential issues.

- Secure Your Data: Enable encryption and access controls to protect sensitive IoT data.

Remember, the key to success is experimentation. Don’t be afraid to try new things and tweak your setup until you find what works best for your use case.
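For the monitoring practice above, one option is an EventBridge rule that fires whenever a job fails. Here’s a sketch; the rule name, target ID, and the use of an SNS topic as the target are assumptions for illustration.

```python
import json


def failed_job_event_pattern(job_queue_arn=None):
    """EventBridge pattern that matches AWS Batch jobs entering FAILED."""
    detail = {"status": ["FAILED"]}
    if job_queue_arn:
        # Optionally restrict the rule to a single queue.
        detail["jobQueue"] = [job_queue_arn]
    return {
        "source": ["aws.batch"],
        "detail-type": ["Batch Job State Change"],
        "detail": detail,
    }


def create_failure_rule(events_client, rule_name, sns_topic_arn, job_queue_arn=None):
    """Create the rule and point it at an SNS topic.

    events_client is expected to be boto3.client("events").
    """
    events_client.put_rule(
        Name=rule_name,
        EventPattern=json.dumps(failed_job_event_pattern(job_queue_arn)),
        State="ENABLED",
    )
    events_client.put_targets(
        Rule=rule_name,
        Targets=[{"Id": "notify-on-failure", "Arn": sns_topic_arn}],
    )
```

The SNS topic also needs an access policy that allows EventBridge to publish to it, which this sketch leaves out.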

Managing Costs in AWS Batch

Cost management is a critical aspect of any cloud deployment. With AWS Batch, you have several options to keep costs under control:

1. Use Spot Instances

Spot Instances can reduce your compute costs by up to 90% compared with On-Demand pricing. The trade-off is that AWS can reclaim them at short notice, so this works best for jobs that are stateless or checkpoint their progress and can simply be retried.
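To make interruptions painless, you can attach a retry strategy that retries only when the host was reclaimed and gives up on genuine application errors. The sketch below follows the `evaluateOnExit` pattern for EC2 Spot, where the status reason of a reclaimed job starts with "Host EC2"; the default of three attempts is an arbitrary choice.

```python
def spot_retry_strategy(attempts=3):
    """retryStrategy that retries Spot reclaims but not application failures."""
    if not 1 <= attempts <= 10:
        # AWS Batch accepts between 1 and 10 attempts.
        raise ValueError("attempts must be between 1 and 10")
    return {
        "attempts": attempts,
        "evaluateOnExit": [
            # The instance was terminated underneath the job: try again.
            {"onStatusReason": "Host EC2*", "action": "RETRY"},
            # Any other failure is treated as a real error: stop retrying.
            {"onReason": "*", "action": "EXIT"},
        ],
    }
```

The result can be passed as `retryStrategy` to either `register_job_definition` or `submit_job`.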

2. Leverage Reserved Instances

If you have predictable workloads, consider purchasing Reserved Instances for a significant discount on EC2 usage.

3. Optimize Job Definitions

Make sure your job definitions are optimized for resource usage. Avoid over-provisioning memory or CPU, as this can lead to unnecessary costs.
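A simple way to right-size memory is to start from the peak usage you actually observed and add some headroom. The helper below is a back-of-the-envelope sketch: the 20% headroom and the 256 MiB rounding step are assumptions, not AWS recommendations.

```python
import math


def recommended_memory_mib(peak_mib, headroom=0.2, step=256):
    """Round observed peak memory plus headroom up to the next multiple of step."""
    if peak_mib <= 0:
        raise ValueError("peak_mib must be positive")
    return math.ceil(peak_mib * (1 + headroom) / step) * step
```

For example, a job that peaked at 1500 MiB would get a 2048 MiB request. On Fargate only certain vCPU and memory combinations are valid, so round the result up to the nearest supported value for your vCPU size.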

By following these strategies, you can ensure that your RemoteIoT batch jobs are both efficient and cost-effective.

Scaling Your RemoteIoT Batch Jobs

As your IoT deployment grows, so will your data processing needs. AWS Batch makes it easy to scale your batch jobs to handle increasing workloads. Here are a few tips to help you scale effectively:

1. Use Auto Scaling

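In a managed compute environment, AWS Batch already scales the number of compute instances with job demand, between the minimum and maximum vCPU limits you set. Make sure the maximum is high enough for your peak load. For EC2-based environments, setting the minimum to zero lets the environment scale down completely when the queue is empty.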
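The vCPU ceiling on a compute environment determines how many jobs can run side by side, so it’s worth doing the arithmetic before a big run. Here’s a rough sketch; it assumes every job requests the same number of vCPUs and ignores startup time.

```python
import math


def max_concurrent_jobs(max_vcpus, vcpus_per_job):
    """How many jobs fit under the compute environment's vCPU ceiling."""
    if vcpus_per_job <= 0:
        raise ValueError("vcpus_per_job must be positive")
    return int(max_vcpus // vcpus_per_job)


def estimated_waves(total_jobs, max_vcpus, vcpus_per_job):
    """Number of back-to-back rounds needed to drain the queue."""
    concurrent = max_concurrent_jobs(max_vcpus, vcpus_per_job)
    if concurrent == 0:
        raise ValueError("a single job does not fit under max_vcpus")
    return math.ceil(total_jobs / concurrent)


def raise_vcpu_ceiling(batch_client, compute_environment, max_vcpus):
    """Raise maxvCpus on an existing environment (boto3.client("batch"))."""
    return batch_client.update_compute_environment(
        computeEnvironment=compute_environment,
        computeResources={"maxvCpus": max_vcpus},
    )
```

With a 16 vCPU ceiling and 1 vCPU per job, 100 jobs run in 7 waves; raising the ceiling to 64 brings that down to 2.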

2. Partition Your Data

Break down large datasets into smaller chunks to improve processing efficiency. This approach also makes it easier to parallelize tasks and reduce overall processing time.
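AWS Batch array jobs are a natural fit for partitioned data: you submit one job with a size, and each child reads its own index from the `AWS_BATCH_JOB_ARRAY_INDEX` environment variable. The sketch below shows one way to map that index to a slice of input keys; the even-chunk split and the job name are assumptions.

```python
import math
import os


def chunk_bounds(total_items, chunk_count, index):
    """Half-open [start, end) range of items handled by chunk number index."""
    size = math.ceil(total_items / chunk_count)
    start = min(index * size, total_items)
    end = min(start + size, total_items)
    return start, end


def my_chunk(keys, chunk_count):
    """Inside the container: pick this child's share of the input keys."""
    # AWS Batch sets this variable for every child of an array job.
    index = int(os.environ.get("AWS_BATCH_JOB_ARRAY_INDEX", "0"))
    start, end = chunk_bounds(len(keys), chunk_count, index)
    return keys[start:end]


def submit_array_job(batch_client, job_queue, job_definition, chunk_count):
    """Submit one array job with chunk_count children (boto3.client("batch"))."""
    response = batch_client.submit_job(
        jobName="soil-moisture-array",  # hypothetical name
        jobQueue=job_queue,
        jobDefinition=job_definition,
        # Array size must be between 2 and 10,000.
        arrayProperties={"size": chunk_count},
    )
    return response["jobId"]
```

Every child needs to see the same ordered list of keys for the slices to line up, so sort the listing before slicing.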

3. Monitor Performance Metrics

Keep a close eye on metrics like CPU utilization, memory usage, and job completion times. Use this data to fine-tune your scaling policies and improve performance.
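Job completion times are easy to pull from the job details themselves. `describe_jobs` reports `createdAt`, `startedAt`, and `stoppedAt` as epoch milliseconds, so a small helper can split the total into queue wait and run time. Treat this as a sketch; the output field names are made up for illustration.

```python
def job_timings(job):
    """Split a job's lifetime into queue wait and run time, in seconds.

    job is one entry from describe_jobs()["jobs"]. Missing timestamps
    (for example, a job that has not started yet) yield None.
    """
    created = job.get("createdAt")
    started = job.get("startedAt")
    stopped = job.get("stoppedAt")

    queue_wait = None
    if created is not None and started is not None:
        queue_wait = (started - created) / 1000

    run_time = None
    if started is not None and stopped is not None:
        run_time = (stopped - started) / 1000

    return {"queue_wait_seconds": queue_wait, "run_seconds": run_time}
```

A long queue wait suggests raising the vCPU ceiling, while a long run time points at the job itself.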

Scaling doesn’t have to be a headache—AWS Batch handles most of the heavy lifting for you. Just focus on optimizing your workflows and let the service do the rest.

Troubleshooting Common Issues

Even the best-laid plans can hit a snag now and then. Here are some common issues you might encounter when working with RemoteIoT batch jobs in AWS, along with solutions to help you overcome them:

1. Job Failures

If a job fails, check the logs in CloudWatch for error messages. This will give you insight into what went wrong and how to fix it.
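Here’s a sketch of how you might gather the failure details in one place. It assumes the job uses the default `/aws/batch/job` log group; if your job definition sets a custom log configuration, use that group name instead.

```python
def failure_summary(batch_client, logs_client, job_id, max_lines=50):
    """Collect status, exit code, and the last log lines for a job.

    batch_client is expected to be boto3.client("batch") and
    logs_client boto3.client("logs").
    """
    job = batch_client.describe_jobs(jobs=[job_id])["jobs"][0]
    container = job.get("container", {})
    summary = {
        "status": job.get("status"),
        "statusReason": job.get("statusReason"),
        "exitCode": container.get("exitCode"),
        "reason": container.get("reason"),
        "logLines": [],
    }
    stream = container.get("logStreamName")
    if stream:
        events = logs_client.get_log_events(
            logGroupName="/aws/batch/job",  # assumption: default log group
            logStreamName=stream,
            limit=max_lines,
            startFromHead=False,  # fetch the most recent lines
        )["events"]
        summary["logLines"] = [event["message"] for event in events]
    return summary
```

A missing log stream usually means the container never started, in which case `statusReason` tends to be the most useful field.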

2. Resource Limits

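Running into resource limits? Check the maximum vCPU setting on your compute environment first, then look at your account’s EC2 or Fargate quotas and request an increase through Service Quotas if needed. Keep in mind that Spot capacity has its own quotas, so switching to Spot Instances does not get you around account limits.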

3. Performance Bottlenecks

If your jobs are taking longer than expected, review your job definitions and compute environments. Optimizing these settings can often lead to significant performance improvements.

Remember, troubleshooting is part of the process. Don’t get discouraged if things don’t work perfectly the first time—learn from your mistakes and keep improving.

Wrapping Up

And there you have it: a walkthrough of a RemoteIoT batch job example in AWS. From setting up your environment to scaling your workloads, we’ve covered the essentials you need to get started with AWS Batch for IoT projects.

To recap, here are the key takeaways:

- AWS Batch simplifies batch processing by dynamically scaling compute resources.

- Integrating with other AWS services like IoT Core and S3 creates a seamless IoT workflow.

- Best practices like optimizing resource allocation and automating workflows can significantly improve efficiency.

- Cost management and scaling are essential for long-term success in cloud-based IoT deployments.

So, what are you waiting for? Dive in and start experimenting with RemoteIoT batch jobs in AWS. And don’t forget to share your experiences and feedback in the comments below. Your insights could help others on their journey to mastering AWS Batch!